Earlier this month, we announced Autopilot, an AI coding assistant designed to help you build internal tools faster than ever. Now that Autopilot is in public beta, we would like to share how we built Autopilot and the lessons we learned along the way.

Large Language Models (LLMs) are extremely good at unstructured, creative tasks. We have all seen this firsthand telling ChatGPT to write sonnets about your cat. However, when it comes to building an AI product that drives real-world value to your customers, LLMs are far from plug-and-play. In this post, we’ll share our learnings to enable you to build your own AI assistant. At a high level:

- Use heuristics and intermediate LLM calls to build context

- Reduce ambiguity and manage your complexity effectively

- Design your infrastructure for a great user experience and fast iteration

Many of the code examples shown are written in Go since that’s what Airplane uses.

What is Autopilot?

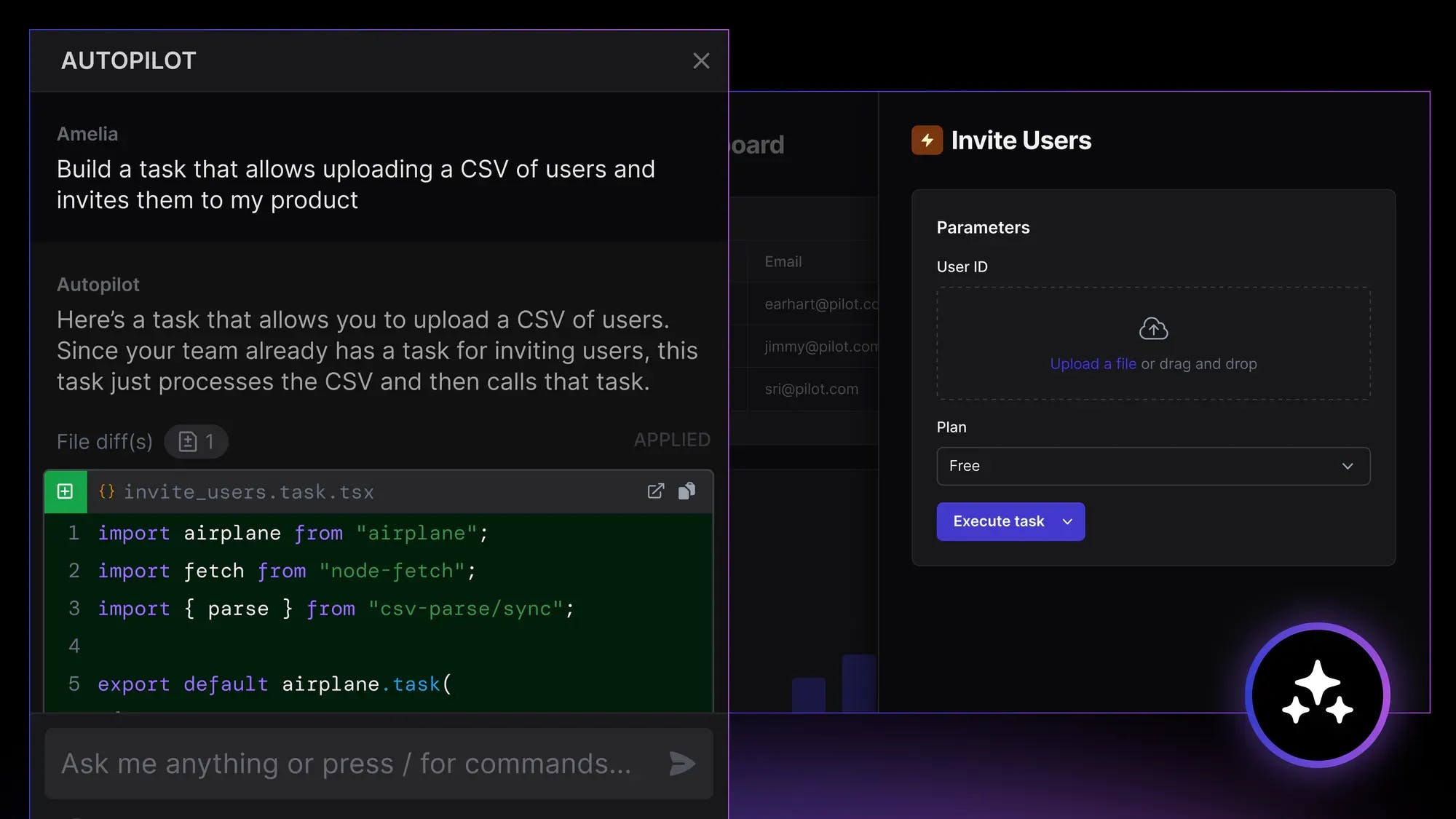

Before diving into the details, let's first discuss what Autopilot is and how it enhances your development experience in Airplane. With Airplane, you can transform scripts, queries, and APIs into custom UIs and workflows. Autopilot has a deep understanding of Airplane’s concepts and features allowing it to:

- Create and edit Tasks, functions that anyone on your team can execute

- Create and edit Views, a React component library that allows you to build UIs on top of Tasks

- Debug issues and respond to questions

- Explain concepts and code

- Get instant responses for support tickets

Here’s a quick 4-minute tutorial that walks through how to build an end-to-end customer orders dashboard using Autopilot. For more details, see the Autopilot documentation.

Now, let’s move on to the lessons!

Context is all you need

Without sufficient context, LLMs may resort to guessing and making assumptions, which can lead to potential inaccuracies and irrelevant suggestions. Large and rich contexts help increase accuracy but are expensive and slow. This makes providing the entirety of your product’s concepts infeasible. This problem is popularly addressed with retrieval augmented generation where you dynamically search a knowledge base, often via embeddings, to determine what context should be provided to your LLM.

Given Airplane's large feature set, supplying the complete context for all features is impossible. Luckily, we have always invested heavily into our public documentation which is the perfect knowledge base for our prompts. We wrote a Python script that crawls our documentation, splits it into chunks, generates embeddings, and stores the chunks in a PostgreSQL database for easy querying. Since the scale of our documents is relatively small, we didn’t need a dedicated vector database.

Converting documentation with rich components into well-formatted, LLM-friendly text is non-trivial. Out of the box HTML to text functions (e.g. html2text) often provided malformed results, especially for our more complicated components. To make parsing easier, we annotated our components with additional class names.

We also save additional metadata for each document, e.g. the topic of the page and the code examples’ language. When we search these documents, we can then select from a subset of documents with a specific topic or return documents with code examples of irrelevant languages filtered out. Our documentation often contains code examples in multiple languages. If we can determine that the user is only writing Python, we don’t want to surface Typescript code examples. We have found that these additional filters provide significantly better results versus out-of-the-box vector search.

In addition to document search, we also use a mix of simple heuristics and LLM calls to provide additional context. If a user is editing code, we can use a simple regex search to determine which parts of the Airplane SDK they are using and provide the SDK function definitions. If their task is using a database resource, we include the schema of their tables.

Intermediate LLM calls are also useful to determine context that is difficult to determine statically. For example, if a user is trying to create a View, it’s not obvious what components might be needed. Luckily, it is very simple and fast to ask a small, relatively fast LLM such as GPT-3.5. We can provide the LLM with a description of each component and ask it to choose which components might be relevant.

Then when we go to write the full code of the View, we can provide the documentation for only the relevant components.

Reducing ambiguity and managing complexity

Complex prompts and ambiguous context require LLMs to guess and infer your intent. Too much complexity and ambiguity lead to hallucinations and dramatically worse performance. Even though larger LLMs (GPT-4, Claude-2) have larger complexity budgets, reducing the amount of inference needed produces significantly better results. Being as straightforward as possible allows the models to spend their complexity budget on other tasks, such as writing higher-quality code.

With Autopilot, we aim to reduce prompt complexity by using a large number of static rules and heuristics to infer intent upfront. We can filter out which Airplane resources the user might need to save the LLM from also needing to determine that when writing code. Instead of relying on an LLM to determine what language the user wants to use, we can just see what files they have in their project.

We use intermediate prompts to remove irrelevant information and determine intent. A smaller, faster model (e.g. GPT-3.5) allows us to first select which database tables may be relevant to the user. Then, we can directly provide metadata only for those tables when writing our response in the next prompt.

Since Autopilot is integrated into Studio, Airplane’s local development experience, we can leverage additional context from what files the user has open, where their cursor is, and what they have selected in their editor to infer their intent. If the user has no files, we can safely assume they are not trying to edit code. We can then restrict the LLM from calling any code editing functionality.

Infrastructure choices for great UX and fast iteration

In this section, we'll delve into some of the infrastructure design choices we made while developing Autopilot. We'll discuss the importance of a strong prompt testing framework, the user experience benefits of streaming outputs, and the cost and performance optimization achieved through dynamic selection of LLMs.

Implement a strong prompt testing framework

Having a good prompt testing framework allows you to confidently improve your prompts, add new features, and run experiments. We decided to build our own testing framework instead of relying on a third party. Doing so allowed us to more smoothly integrate with our existing tooling. The overall simplicity of the testing framework did not necessitate a dedicated service. Our tests are defined as code and support regex assertions, run code linters (e.g. pylint, tsc), checks for entity definitions, and much more.

Our prompttest.go binary also has the option to output the raw LLM inputs. This makes it very easy to make tweaks in online LLM playgrounds and test out different ideas.

Stream outputs for an improved user experience

Streaming outputs provides a significantly improved user experience. This is especially important if your use case includes generating large response payloads. Reducing time to first response is very important to make your assistant feel responsive.

We designed Autopilot’s infrastructure with a focus on streaming as a primary feature. Each LLM invocation immediately returns an Accumulator object that contains the response.

Accumulator objects are easily passed around our chain of prompts. If a downstream prompt requires the output of a previous prompt, it can call .Value() and block on the result. The response from the last prompt in the chain is flushed to the client asynchronously, allowing us to stream outputs to the user.

One of the challenges with streamed outputs is differentiating structured content. When editing Tasks or Views with Autopilot, we need to associate the edit suggestion with the target file. To do so, we could try to get the LLM to include the file name in a custom format that we then have to parse. This is suboptimal in two ways. Firstly, it increases the complexity of our prompt which, as mentioned in the earlier section, results in lower overall quality. Secondly, the chosen custom format has to be parsed in the response stream, introducing a lot of room for error.

For Autopilot, we know the file name to edit ahead of time. Instead of relying on the LLM to correctly include the file name in a custom format, we ask the LLM to return code blocks that we then map onto file objects. Since the language models are very familiar with markdown, the performance is extremely consistent.

Dynamically select LLMs for cost and performance optimization

LLMs vary greatly by cost and performance. GPT-4 consistently produces higher quality responses than GPT-3.5 does but is also much more expensive and slower. With Autopilot, our many prompts have different requirements for how fast and accurate the responses need to be. For example, a prompt used in the middle of a prompt chain used to determine what documentation is needed to write code has to be very fast. The user won’t be able to see code being written until the previous prompt is fully completed. And so, we choose to use a faster GPT-3.5 model to do so. For the actual code writing prompt, we choose to use GPT-4 for its increased accuracy. Since we can stream the response, the increased latency matters less.

Every Autopilot prompt dynamically selects an LLM based on a default model (e.g. GPT-4), the prompt kind (e.g. Python task creation), and a guess at the maximum tokens to be used. This allows us to pick the best model for each different use case. We can choose models depending on the user’s team or have per-model rate limits that switch to a cheaper model if a specific team is too active. By annotating each LLM request with the prompt kind, our testing framework allows us to easily test LLMs for each prompt via per prompt overrides.

With the speed of LLM progression, it is extremely important to be able to switch models. At Airplane, we are constantly evaluating new models. Once something better or cheaper comes up, switching LLMs is just a few lines of code!

The future of Autopilot

Now that Autopilot is in public beta, our focus is on integrating AI into more aspects of our product. Soon, you’ll be able to use Autopilot directly in the Studio editor, in the Task / View creation process, and much more! We have built strong AI foundations over the last few months and are very excited to integrate these building blocks everywhere. We will continue to tune our prompts and begin experimenting with fine-tuned models. For Autopilot, the chat assistant is just the beginning!

And again, Autopilot is available for everyone to use in Studio today! To try it out, you can get started with Airplane for free or book a demo with our team. We'd love to hear any feedback or requests at [email protected].